Recently, I had to connect a NetApp device to our HP blades in a c7000 chassis using a straight connection (meaning no switch in between). It took significant time to make this work, because the connection between a NetApp storage device and HP Virtual Connect is supposed to go through a switch, not a straight, direct connection.

Cabling

There are two Virtual Connect modules in the HP c7000 chassis and two dual-port 10Gb NICs in each NetApp controller. On each Virtual Connect module, ports x7 and x8 are used. This is how the ports are connected:

- Port x7 on the first Virtual Connect module (VC1) is connected to NIC e3b on the first controller with a blue FC cable

- Port x8 on VC1 is connected to NIC e3b on the second controller with a yellow FC cable

- Port x7 on the second Virtual Connect module (VC2) is connected to NIC e3a on the first controller with a green FC cable

- Port x8 on VC2 is connected to NIC e3a on the second controller with a gray FC cable
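The cross-cabling above gives each controller one path through each Virtual Connect module, and it is also why port x7 on both modules ends up at the same controller. A minimal Python sketch that models the four cables as a dictionary and checks both properties; the `controller1`/`controller2` labels and the function names are hypothetical.

```python
# Cabling from the list above: (VC module, VC port) -> (NetApp controller, NetApp NIC).
CABLING = {
    ("VC1", "x7"): ("controller1", "e3b"),
    ("VC1", "x8"): ("controller2", "e3b"),
    ("VC2", "x7"): ("controller1", "e3a"),
    ("VC2", "x8"): ("controller2", "e3a"),
}


def vc_modules_per_controller(cabling):
    """Return {controller: set of VC modules it is cabled to}."""
    result = {}
    for (module, _port), (controller, _nic) in cabling.items():
        result.setdefault(controller, set()).add(module)
    return result


def uplink_groups(cabling):
    """Group VC ports by the controller they reach: {controller: sorted [(module, port)]}."""
    groups = {}
    for vc_end, (controller, _nic) in cabling.items():
        groups.setdefault(controller, []).append(vc_end)
    return {controller: sorted(ports) for controller, ports in groups.items()}


for controller, modules in sorted(vc_modules_per_controller(CABLING).items()):
    # A controller is redundant only if it is reachable through both VC modules.
    status = "redundant" if modules == {"VC1", "VC2"} else "SINGLE PATH"
    print(controller, sorted(modules), status)
print(uplink_groups(CABLING))
```

If one cable were moved so that both of a controller's links landed on the same VC module, the first check would report `SINGLE PATH` for that controller.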

NOTE: When looking at the NetApp controllers, NIC e3b is on the left and NIC e3a is on the right, as shown in the image above.

NetApp configuration

This is how the NICs are configured on the first controller. These commands must also be added to /etc/rc on each controller; otherwise, the configuration changes are lost when the controllers are rebooted.

    ifconfig e3a down
    ifconfig e3b down
    ifgrp create single Trunk10 e3a e3b
    vlan create Trunk10 10
    ifconfig Trunk10-10 192.168.10.1 netmask 255.255.255.0 partner 192.168.11.1
    ifgrp favor e3b
    ifconfig Trunk10-10 up

This is how the NICs are configured on the second controller.

    ifconfig e3a down
    ifconfig e3b down
    ifgrp create single Trunk11 e3a e3b
    vlan create Trunk11 11
    ifconfig Trunk11-11 192.168.11.1 netmask 255.255.255.0 partner 192.168.10.1
    ifgrp favor e3a
    ifconfig Trunk11-11 up
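The two controller configurations above mirror each other: only the group name, VLAN ID, IP, partner IP, and favored port change. A small Python sketch that generates both command sets from those parameters, which can help keep the console commands and the /etc/rc copies in sync. The `rc_lines` helper is hypothetical; it only produces text and does not talk to the controllers.

```python
def rc_lines(trunk, vlan_id, ip, partner_ip, favored_nic,
             nics=("e3a", "e3b"), netmask="255.255.255.0"):
    """Build the Data ONTAP commands used above for one controller."""
    vif = f"{trunk}-{vlan_id}"  # VLAN interface name: <ifgrp>-<vlan id>
    lines = [f"ifconfig {nic} down" for nic in nics]
    lines += [
        f"ifgrp create single {trunk} {' '.join(nics)}",  # single-mode group: one active link
        f"vlan create {trunk} {vlan_id}",
        f"ifconfig {vif} {ip} netmask {netmask} partner {partner_ip}",
        f"ifgrp favor {favored_nic}",  # preferred active link within the single-mode group
        f"ifconfig {vif} up",
    ]
    return lines


# Parameters taken from the two configurations above.
controller1 = rc_lines("Trunk10", 10, "192.168.10.1", "192.168.11.1", "e3b")
controller2 = rc_lines("Trunk11", 11, "192.168.11.1", "192.168.10.1", "e3a")
print("\n".join(controller1))
print()
print("\n".join(controller2))
```

Note that each controller's `partner` address is the other controller's IP, and each favors a different port, so under normal conditions the two controllers send traffic through different Virtual Connect modules.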

If you need to rebuild the configuration, first delete the VLAN, and then destroy the interface group.

    ifconfig Trunk10-10 down
    vlan delete Trunk10 10
    ifgrp destroy Trunk10
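The teardown order matters because the VLAN interface sits on top of the interface group, so the VLAN has to go first. A short sketch that produces the sequence above for a given group and VLAN ID; the helper name is hypothetical, and the Trunk11/11 call for the second controller is an assumption based on its configuration, since the post only shows the Trunk10 teardown.

```python
def teardown_lines(trunk, vlan_id):
    """Return the commands that undo the ifgrp/VLAN setup, in the order they must run."""
    vif = f"{trunk}-{vlan_id}"
    return [
        f"ifconfig {vif} down",            # stop traffic on the VLAN interface
        f"vlan delete {trunk} {vlan_id}",  # remove the VLAN before the group it sits on
        f"ifgrp destroy {trunk}",          # the group can be destroyed once nothing uses it
    ]


for trunk, vlan in (("Trunk10", 10), ("Trunk11", 11)):
    print("\n".join(teardown_lines(trunk, vlan)))
```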

To see the configuration, run ifconfig -a.

Virtual Connect configuration

The Virtual Connect module can be managed from http://<IP_of_the_Virtual_Connect>

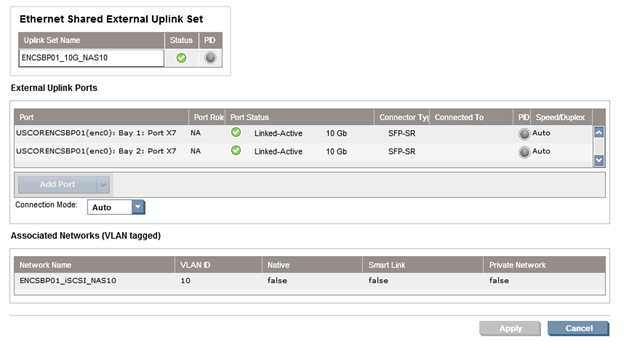

There are two Shared External Uplink Sets defined.

- ENCSBP01_10G_NAS10

- ENCSBP01_10G_NAS11

If these sets need to be recreated, follow the screenshots below. In this setup, ports x7 on both VC1 (Bay 1) and VC2 (Bay 2) are defined as one external uplink, and the same applies to ports x8.

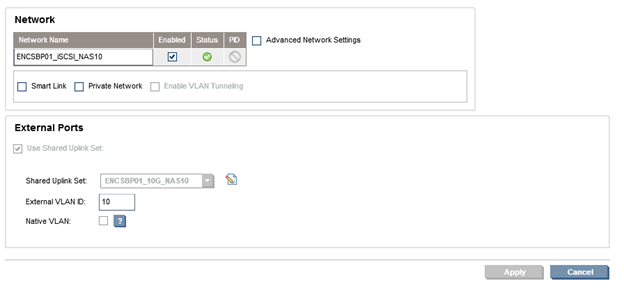

In addition to the Shared Uplink Sets, there are two networks defined.

- ENCSBP01_iSCSI_NAS10

- ENCSBP01_iSCSI_NAS11

The first network is associated with the first Shared Uplink Set and External VLAN ID 10; the second network is associated with the second Shared Uplink Set and External VLAN ID 11.

NOTE: It is important to match the VLAN IDs (10 and 11) on the NetApp controller and the networks defined in Virtual Connect.
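Since a VLAN mismatch between the two sides is easy to miss, a quick cross-check can help. A minimal Python sketch, assuming you copy the `vlan create` lines from each controller's /etc/rc and the External VLAN IDs of the Virtual Connect networks into the two dictionaries by hand; the dictionaries and function names are hypothetical, and the parser only handles the `vlan create <ifgrp> <id> [<id> ...]` form used in this post.

```python
# `vlan create` lines as configured on each controller (from the NetApp section).
NETAPP_VLANS = {
    "controller1": "vlan create Trunk10 10",
    "controller2": "vlan create Trunk11 11",
}

# Virtual Connect networks and their External VLAN IDs (from the VC section).
VC_NETWORKS = {
    "ENCSBP01_iSCSI_NAS10": 10,
    "ENCSBP01_iSCSI_NAS11": 11,
}


def netapp_vlan_ids(lines):
    """Collect VLAN IDs from `vlan create <ifgrp> <id> [<id> ...]` lines."""
    ids = set()
    for line in lines:
        parts = line.split()
        if parts[:2] == ["vlan", "create"]:
            ids.update(int(p) for p in parts[3:] if p.isdigit())
    return ids


def mismatches(netapp_lines, vc_networks):
    """Return (VLANs only on the NetApp side, VLANs only in Virtual Connect)."""
    netapp = netapp_vlan_ids(netapp_lines)
    vc = set(vc_networks.values())
    return netapp - vc, vc - netapp


print(mismatches(NETAPP_VLANS.values(), VC_NETWORKS))  # (set(), set()) when both sides agree
```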

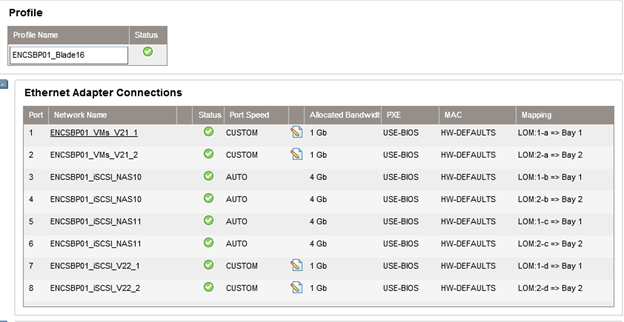

Finally, when creating a profile for a server (ESXi or Windows), the following NICs should be assigned.

This means that the first and second NICs are on VLAN21, from a different Shared Uplink Set; NICs 3 through 6 are the ones used for iSCSI; and the last two NICs are used for the old iSCSI configuration and VLAN22 servers. Make sure that NICs 1, 2, 7, and 8 are set to a CUSTOM port speed of 1Gb. That way, the iSCSI NICs (3-6) get 4Gb each.
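The 4Gb figure follows from how a Flex-10 port is shared: each 10Gb LOM port carries four FlexNICs (A through D), and per the teaming list later in this post, A and D are the 1Gb NICs while B and C carry iSCSI. A worked sketch of that arithmetic, assuming the bandwidth left over after the custom speeds is split evenly among the FlexNICs without a custom setting (which is what the 4Gb result implies). The function name is hypothetical.

```python
PORT_GBPS = 10  # each Flex-10 LOM port carries 10Gb shared by its four FlexNICs


def flexnic_split(custom, flexnics=("A", "B", "C", "D"), port_gbps=PORT_GBPS):
    """Assumption: FlexNICs with a custom speed get exactly that speed,
    and the remaining bandwidth is shared equally by the others."""
    remaining = port_gbps - sum(custom.values())
    if remaining < 0:
        raise ValueError("custom speeds exceed the port bandwidth")
    auto = [n for n in flexnics if n not in custom]
    share = remaining / len(auto) if auto else 0
    return {n: custom.get(n, share) for n in flexnics}


# A (NICs 1/2, public) and D (NICs 7/8) pinned at 1Gb on each port.
print(flexnic_split({"A": 1, "D": 1}))  # {'A': 1, 'B': 4.0, 'C': 4.0, 'D': 1}
```

In other words, 10Gb minus 2 x 1Gb leaves 8Gb per port, shared by the two iSCSI FlexNICs.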

MS Windows configuration

Depending on the subnet MS Windows is going to use for its public IP, use NIC1 and NIC2 if the server is going to be on the 128.202.21.0/24 subnet, or NIC7 and NIC8 if it is going to be on the 128.202.22.0/24 subnet. Team these NIC pairs using the provided HP Network Team Configuration utility. Your IP subnets may vary.

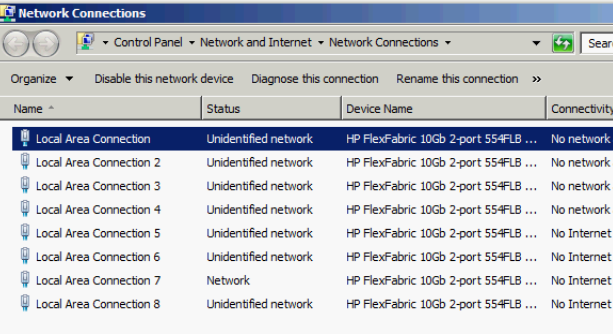

When dealing with MS Windows, it is unclear which NIC under Network Connections corresponds to which NIC in Virtual Connect (see previous screenshot).

To identify them, run ipconfig /all in Windows and note each adapter's Physical Address (MAC). Then go to https://Chassis_IP, select the server name under Device Bays on the left, click the Information tab, and match those MAC addresses against the ones listed there.

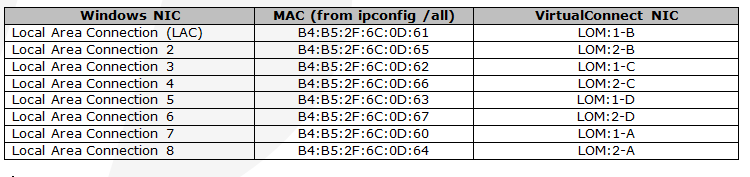

Based on this information, you can map the NICs.
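Matching the MAC addresses by eye gets tedious with eight NICs. A minimal Python sketch that parses English-language `ipconfig /all` output and maps each FlexNIC label to a Windows connection name, assuming you copy the FlexNIC MACs from the Information tab by hand. The sample output, the MAC values, and the function names are all hypothetical; MACs are normalized so dash- and colon-separated forms compare equal.

```python
# Hypothetical excerpt of `ipconfig /all` output (English Windows).
SAMPLE_IPCONFIG = """\
Ethernet adapter Local Area Connection 3:

   Physical Address. . . . . . . . . : 00-17-A4-77-00-12

Ethernet adapter Local Area Connection 4:

   Physical Address. . . . . . . . . : 00-17-A4-77-00-14
"""


def normalize_mac(mac):
    """'00-17-A4-77-00-12' or '00:17:a4:77:00:12' -> '0017a4770012'."""
    return "".join(ch for ch in mac.lower() if ch in "0123456789abcdef")


def parse_ipconfig(text):
    """Map normalized MAC -> adapter name from `ipconfig /all` output."""
    macs = {}
    adapter = None
    for line in text.splitlines():
        if line and not line[0].isspace() and line.rstrip().endswith(":"):
            # Adapter header such as "Ethernet adapter Local Area Connection 3:"
            adapter = line.rstrip()[:-1].replace("Ethernet adapter ", "", 1)
        elif adapter and "Physical Address" in line:
            macs[normalize_mac(line.split(":", 1)[1])] = adapter
    return macs


def map_flexnics(ipconfig_text, oa_macs):
    """oa_macs: {FlexNIC label: MAC}, copied from the server's Information tab."""
    by_mac = parse_ipconfig(ipconfig_text)
    return {label: by_mac.get(normalize_mac(mac), "not found") for label, mac in oa_macs.items()}


OA_MACS = {"1C": "00:17:A4:77:00:12", "2C": "00:17:A4:77:00:14"}  # hypothetical values
print(map_flexnics(SAMPLE_IPCONFIG, OA_MACS))
# {'1C': 'Local Area Connection 3', '2C': 'Local Area Connection 4'}
```

On a real server, save the command output with `ipconfig /all > ipconfig.txt` and pass the file's contents instead of the sample string.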

If we look at the screenshot from the Virtual Connect server profile, we need to team:

- 1A and 2A (LAC 7 and LAC 8) for the public NIC team on the 128.202.21.0/24 network, or whatever your public server subnet is

- 1B and 2B (LAC and LAC 2) for iSCSI NIC team on the first NetApp controller

- 1C and 2C (LAC 3 and LAC 4) for iSCSI NIC team on the second NetApp controller

- 1D and 2D (LAC 5 and LAC 6) for another IP subnet that you might use

ESX/ESXi configuration

When building an ESXi host, follow the screenshots below. Use vmnic0 and vmnic1 for the Management Network and the VM NET 21 network (servers in the 128.202.21.0/24 range, or whatever your server subnet is).

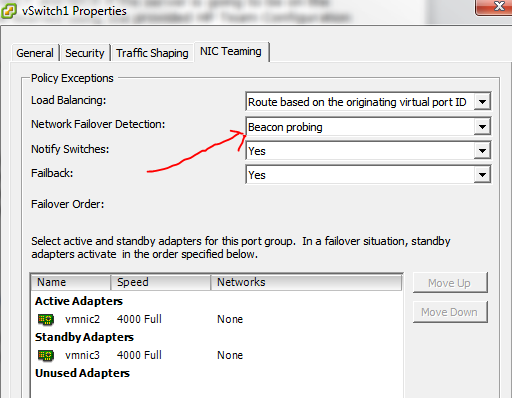

The iSCSI NICs are vmnic2 and vmnic3 for the first controller, and vmnic4 and vmnic5 for the second controller. They MUST be in active/standby mode; otherwise, the connection won't work.

In addition, Network Failover Detection MUST be set to Beacon Probing on both vSwitch1 and vSwitch2; otherwise, failover won't work.
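The post sets these policies through the vSphere Client; the same settings can also be applied from the ESXi shell with `esxcli network vswitch standard policy failover set`. A sketch that generates one such command per iSCSI vSwitch. It assumes vSwitch1 holds vmnic2/vmnic3 and vSwitch2 holds vmnic4/vmnic5, and that the lower-numbered vmnic is the active one; which uplink should be active is not stated above, so adjust these (hypothetical) assignments to your environment and verify the options against your ESXi version.

```python
# Hypothetical layout: vSwitch1 -> first controller, vSwitch2 -> second controller.
# Tuple order is (active uplink, standby uplink).
ISCSI_VSWITCHES = {
    "vSwitch1": ("vmnic2", "vmnic3"),
    "vSwitch2": ("vmnic4", "vmnic5"),
}


def failover_commands(vswitches):
    """One esxcli command per vSwitch: one active uplink, one standby, beacon probing."""
    cmds = []
    for name, (active, standby) in vswitches.items():
        cmds.append(
            "esxcli network vswitch standard policy failover set"
            f" --vswitch-name={name} --active-uplinks={active}"
            f" --standby-uplinks={standby} --failure-detection=beacon"
        )
    return cmds


for cmd in failover_commands(ISCSI_VSWITCHES):
    print(cmd)
```

Generating the commands rather than typing them keeps both vSwitches consistent; the printed lines are meant to be pasted into the ESXi shell.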

Troubleshooting failover

The easiest way to validate failover is to simulate a Virtual Connect crash: continuously ping the first and second controllers' iSCSI IPs (192.168.10.1 and 192.168.11.1) and unplug both cables from the VC2 module.
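A constant ping is easier to read when lost replies are counted per target. A minimal Python sketch that pings both iSCSI addresses once per round and reports missed replies, assuming it runs on a Linux host on the iSCSI network with the iputils `ping` (`-c` count, `-W` timeout in seconds). The function names are hypothetical, and the probe is pluggable so the loop can be exercised without a network.

```python
import subprocess
import time


def ping_once(host, timeout_s=1):
    """Send one ICMP echo with the system ping (Linux iputils flags); True on reply."""
    cmd = ["ping", "-c", "1", "-W", str(timeout_s), host]
    result = subprocess.run(cmd, stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
    return result.returncode == 0


def watch(hosts, rounds, probe=ping_once, interval_s=1.0):
    """Probe every host once per round; return {host: number of missed replies}."""
    missed = {h: 0 for h in hosts}
    for i in range(rounds):
        for h in hosts:
            if not probe(h):
                missed[h] += 1
                print(f"round {i}: no reply from {h}")
        time.sleep(interval_s)
    return missed
```

For example, `watch(["192.168.10.1", "192.168.11.1"], rounds=120)` probes both controllers for about two minutes; unplug the VC2 cables partway through. A short burst of missed replies that then stops suggests failover worked, while replies that never come back point to the teaming or beacon probing settings.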

5 comments

I have a very similar configuration that I'm trying to put together. The difference is that I have two enclosures connected via Virtual Connect. We are running the Flex-10 modules in bays 1 and 2 of both enclosures. Would there be a compelling reason to use the x7 and x8 interfaces on them? With our layout, we are looking at doing NFS and CIFS over the 10G links. Do you still think that splitting the shared uplinks into two groups is necessary? Any advice you can provide would be greatly appreciated. Thanks.

I forgot to mention that all my other ports (x1-x6) were already taken, so I had to use ports x7 and x8. You can use any ports available. As a matter of fact, HP recommends x7 and x8 for inter-chassis communication, something you already have in place. As for splitting the connection, that was the only way to make this work. I tried to use one SUS encompassing both Virtual Connect modules, but it didn't work. I tried probably every possible LACP/non-LACP combination on the NetApp and Virtual Connect, and it didn't work: either I wasn't able to ping, or there was no redundancy. This is the only config that gives me both. If you find a better solution, let me know. Life would be much easier if there was a switch in between. :)

I would like to thank you for your blog post. We followed your configuration as closely as possible (minus IP addressing and VLAN IDs). I ended up having e3a and e3b mixed up as to what was cabled where on the VC side. Since we are stacking two enclosures, we split the connections between the two chassis and created the SUSs so that controller A's connections were one SUS and controller B's connections were a second SUS. Since I only have a single port on the blade usable for this traffic, I set LOM2-a to multiple networks and specified both Ethernet networks. Then, on the VMware side, I created another vSwitch and created vmkernel port groups for both networks. Since we also have a requirement to do CIFS to the VM, I created two virtual machine port groups (one for each VLAN). Then, on the VM side, I added a NIC for each port group. Once a static IP address on the same subnet as the NetApp was assigned, CIFS came right up.

Great post, I am facing the same problem and try to connect a FAS2240 directly on the Flex10 of a C7000.

Am I wrong, or is the only drawback of this setup that we can't do iSCSI multipath?

Because in this setup, if I understand correctly, each NetApp controller has an iSCSI IP in only one VLAN...

Correct. You won’t have multipath.