In one of my previous posts, I wrote about accessing DynamoDB and AWS Gateway/Lambda using IAM roles and secrets from an EC2/EKS Node.js application. The idea is to reference passwords in your code and never store any secrets or passwords in the code itself. By using roles, we can access the secrets in AWS Secrets Manager and use them dynamically in the code. That solution has its pros and cons. The upside is that you don't have to do anything extra except create an IAM role and the secrets. The downside is that you need the AWS SDK as part of your application: you use the AWS SDK for Node.js to get the credentials from Secrets Manager, which means you have to keep the SDK up to date and add extra code.

Another way is to use the Kubernetes Secrets Store CSI driver and ASCP (the AWS Secrets and Configuration Provider), which gets the secret and mounts it as a file. You still need IAM roles to access Secrets Manager, but you don't need the AWS SDK. You can read the secrets as you read a file.


Create an EKS cluster

We’ll create an EKS cluster using the eksctl tool.

CLUSTER_NAME="eksDemoCluster"
REGION="us-east-2"
NAMESPACE="default"

eksctl create cluster --name $CLUSTER_NAME \
  --region $REGION --version 1.28 \
  --node-type t3.small --nodes 2

We’ll use helm to install the CSI driver and the specific ASCP provider for AWS.

helm repo add secrets-store-csi-driver https://kubernetes-sigs.github.io/secrets-store-csi-driver/charts
helm repo add aws-secrets-manager https://aws.github.io/secrets-store-csi-driver-provider-aws
helm repo update
helm install -n kube-system csi-secrets-store secrets-store-csi-driver/secrets-store-csi-driver
helm install -n kube-system secrets-provider-aws aws-secrets-manager/secrets-store-csi-driver-provider-aws

Check if everything is OK.

kubectl --namespace=kube-system get pods -l "app=secrets-store-csi-driver"
NAME                                               READY   STATUS    RESTARTS   AGE
csi-secrets-store-secrets-store-csi-driver-nhq7b   3/3     Running   0          19s
csi-secrets-store-secrets-store-csi-driver-tft6q   3/3     Running   0          19s

Create a secret

Let’s create a secret that we’ll be using in a mock-up app.

SECRET_NAME="demoapp/mysecret"

SECRET_ARN=$(aws secretsmanager create-secret --name $SECRET_NAME \
  --secret-string '{"username":"Administrator", "password":"SuperSecretPassword$"}' \
  --output text --query 'ARN' --region $REGION)

echo $SECRET_ARN

arn:aws:secretsmanager:us-east-2:123456789012:secret:demoapp/mysecret-lboyfd

Create an IAM policy

We also need to define an IAM policy that will allow our EKS pods to access only this particular secret and nothing else.

cat << EOF > polDemoSecret.json
{
  "Version": "2012-10-17",
  "Statement": [ {
    "Effect": "Allow",
    "Action": ["secretsmanager:GetSecretValue", "secretsmanager:DescribeSecret"],
    "Resource": ["$SECRET_ARN"]
  } ]
}
EOF
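If you would rather generate the policy document from a deployment script than from a heredoc, the same structure can be built in Node.js. This is only a minimal sketch: the function name buildSecretPolicy is hypothetical, and the ARN check is a rough sanity test that assumes the standard "aws" partition, not a full validation.

```javascript
'use strict';

// Hypothetical helper: builds the same policy document as the heredoc above
// for a single Secrets Manager secret ARN.
function buildSecretPolicy(secretArn) {
  // Rough sanity check only; assumes the standard "aws" partition.
  if (!/^arn:aws:secretsmanager:[a-z0-9-]+:\d{12}:secret:.+$/.test(secretArn)) {
    throw new Error(`Not a Secrets Manager secret ARN: ${secretArn}`);
  }
  return {
    Version: '2012-10-17',
    Statement: [
      {
        Effect: 'Allow',
        Action: ['secretsmanager:GetSecretValue', 'secretsmanager:DescribeSecret'],
        // Only this one secret is allowed, nothing else.
        Resource: [secretArn],
      },
    ],
  };
}

const policy = buildSecretPolicy(
  'arn:aws:secretsmanager:us-east-2:123456789012:secret:demoapp/mysecret-lboyfd'
);
console.log(JSON.stringify(policy, null, 2));
```

The printed JSON could be saved as polDemoSecret.json and passed to aws iam create-policy exactly like the file created by the heredoc.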

Create the policy.

POLICY_ARN=$(aws iam create-policy --policy-name polDemoSecret \
  --policy-document file://polDemoSecret.json \
  --output text --query 'Policy.Arn')
echo $POLICY_ARN
arn:aws:iam::123456789012:policy/polDemoSecret

Create an IAM OIDC provider

Let’s determine whether we have an existing IAM OIDC provider for our cluster.

aws eks describe-cluster --name $CLUSTER_NAME --region $REGION \
  --query "cluster.identity.oidc.issuer" --output text
https://oidc.eks.us-east-2.amazonaws.com/id/D7FAD6F2BEF430FC1CB673777A9E4FED

When we provisioned the cluster with eksctl, the OIDC issuer URL was created automatically. We need the ID of the OIDC issuer, which is the hex value at the end of the URL.

OIDCID=$(aws eks describe-cluster --name $CLUSTER_NAME --region $REGION \
  --query "cluster.identity.oidc.issuer" --output text | cut -d '/' -f 5)
echo $OIDCID
E9B5E80C37A71FA3932B94EFF5BA3437
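The cut -d '/' -f 5 step simply takes the last path segment of the issuer URL. If you need the same value inside a Node.js script, a small sketch could look like this; the function name extractOidcId is hypothetical, and the check assumes the issuer URL always has the /id/<hex> shape shown in the output above.

```javascript
'use strict';

// Hypothetical helper: returns the trailing ID segment of an EKS OIDC issuer URL,
// mirroring `cut -d '/' -f 5` from the shell command above.
function extractOidcId(issuerUrl) {
  const { pathname } = new URL(issuerUrl); // e.g. "/id/D7FA..."
  const segments = pathname.split('/').filter(Boolean);
  // Assumption: the path is exactly "/id/<ID>".
  if (segments.length !== 2 || segments[0] !== 'id') {
    throw new Error(`Unexpected OIDC issuer URL: ${issuerUrl}`);
  }
  return segments[1];
}

const oidcId = extractOidcId(
  'https://oidc.eks.us-east-2.amazonaws.com/id/D7FAD6F2BEF430FC1CB673777A9E4FED'
);
console.log(oidcId);
```

Parsing with the built-in URL class instead of splitting the raw string means a malformed value fails loudly rather than silently returning the wrong field.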

Check if the IAM OIDC provider is already configured. It shouldn't be if you provisioned a brand-new cluster.

aws iam list-open-id-connect-providers | grep $OIDCID

If, for whatever reason, the command above produced any output, skip the associate-iam-oidc-provider step below.

If you don’t have any output, associate the OIDC provider with the cluster.

eksctl utils associate-iam-oidc-provider --cluster $CLUSTER_NAME --region $REGION --approve
2023-12-22 12:27:47 [ℹ] will create IAM Open ID Connect provider for cluster "eksDemoCluster" in "us-east-2"
2023-12-22 12:27:47 [✔] created IAM Open ID Connect provider for cluster "eksDemoCluster" in "us-east-2"

Cluster service account

We also need a service account for the cluster, and we need to attach the policy for the secret that we created earlier.

SA_NAME="sademosecret"
eksctl create iamserviceaccount --name $SA_NAME --namespace $NAMESPACE \
  --cluster $CLUSTER_NAME --region $REGION \
  --attach-policy-arn $POLICY_ARN --approve --override-existing-serviceaccounts
kubectl get sa

Secret Provider Class

…and finally we need a secret provider class (a SecretProviderClass resource) that we'll deploy on the EKS cluster.

cat << EOF > spc.yaml
apiVersion: secrets-store.csi.x-k8s.io/v1
kind: SecretProviderClass
metadata:
  name: spc-demosecret
  namespace: $NAMESPACE
spec:
  provider: aws
  parameters:
    objects: |
      - objectName: "$SECRET_NAME"
        objectType: "secretsmanager"
EOF

kubectl apply -f spc.yaml

Test with busybox pod

Create this YAML file that will deploy a pod. Pay attention to the namespace, the service account and the secret provider class that specifies the secret; we have to provide all three. Check this link on how to mount and specify different secrets.

cat << EOF > app.yaml
apiVersion: v1
kind: Pod
metadata:
  name: busybox
  namespace: $NAMESPACE
spec:
  serviceAccountName: $SA_NAME
  volumes:
  - name: secretsvolume
    csi:
      driver: secrets-store.csi.k8s.io
      readOnly: true
      volumeAttributes:
        secretProviderClass: "spc-demosecret"
  containers:
  - image: public.ecr.aws/docker/library/busybox:1.36
    command:
      - sleep
      - "3600"
    imagePullPolicy: IfNotPresent
    name: busybox
    volumeMounts:
    - name: secretsvolume
      mountPath: "/mnt/secrets-store"
      readOnly: true
  restartPolicy: Always
EOF

kubectl apply -f app.yaml

Test the secret retrieval. The secret that we created was demoapp/mysecret, but a forward slash in a file name would mean a directory, so the mounted file is named demoapp_mysecret. It's as simple as that: the secret is just a file under the /mnt/secrets-store directory.

kubectl exec -it $(kubectl get pods | awk '/busybox/{print $1}' | head -1) -- cat /mnt/secrets-store/demoapp_mysecret; echo

{"username":"Administrator", "password":"SuperSecretPassword$"}
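An application that knows only the secret name can derive the mounted file path from it. The sketch below encodes just the behaviour observed above (the forward slash becomes an underscore); the function name mountedSecretPath is hypothetical, and the default mount directory is the one used in app.yaml.

```javascript
'use strict';
const path = require('path');

// Assumption taken from the behaviour described above: "/" in the secret name
// becomes "_" in the name of the mounted file.
function mountedSecretPath(secretName, mountDir = '/mnt/secrets-store') {
  // path.posix keeps forward slashes regardless of the host OS.
  return path.posix.join(mountDir, secretName.replace(/\//g, '_'));
}

console.log(mountedSecretPath('demoapp/mysecret'));
```

Keeping this mapping in one place avoids hard-coding the underscore form of the name in several parts of the application.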

Node.js app

Create a folder and add this file. Save it as server.js.

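'use strict';

const express = require('express');
const fs = require('fs');

const app = express();
const port = 3000;

// Read the mounted secret once at startup; data stays undefined if the read fails.
let data;
try {
  data = fs.readFileSync('/mnt/secrets-store/demoapp_mysecret', 'utf8');
  console.log(data);
} catch (e) {
  console.log('Error:', e.stack);
}

// Demo only: return the secret so the mount can be verified in a browser.
app.get('/', (req, res) => {
  if (data === undefined) {
    return res.status(500).send('Secret not available');
  }
  // The file already contains JSON, so send it as-is instead of stringifying it again.
  res.type('json').send(data);
});

app.listen(port, () => {
  console.log(`Example app listening on port ${port}`);
});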
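In a real application you would normally parse the file and use the individual fields rather than return the raw content. A minimal sketch of that step follows; the helper name loadSecret is hypothetical, and it assumes the secret is a JSON object with username and password keys, as in the secret created earlier. The demo writes a temporary file so the snippet also runs outside the cluster.

```javascript
'use strict';
const fs = require('fs');
const os = require('os');
const path = require('path');

// Hypothetical helper: reads a mounted secret file and parses it as JSON.
function loadSecret(filePath) {
  const raw = fs.readFileSync(filePath, 'utf8');
  const secret = JSON.parse(raw); // throws SyntaxError if the file is not JSON
  // Assumption: the secret carries string "username" and "password" fields.
  for (const key of ['username', 'password']) {
    if (typeof secret[key] !== 'string') {
      throw new Error(`Secret at ${filePath} is missing "${key}"`);
    }
  }
  return secret;
}

// Demo against a temporary file that stands in for /mnt/secrets-store/demoapp_mysecret.
const demoDir = fs.mkdtempSync(path.join(os.tmpdir(), 'secrets-'));
const demoFile = path.join(demoDir, 'demoapp_mysecret');
fs.writeFileSync(demoFile, '{"username":"Administrator", "password":"SuperSecretPassword$"}');

const { username, password } = loadSecret(demoFile);
// Avoid logging the password itself; its length is enough to confirm the read.
console.log(`Loaded credentials for ${username} (password length ${password.length})`);
```

Inside the pod, the call would be loadSecret('/mnt/secrets-store/demoapp_mysecret'), and failing fast on a missing field makes a misconfigured mount obvious at startup.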

Let’s create the Dockerfile so we can build the image.

FROM node:20

# Create app directory
WORKDIR /usr/src/app

# Install app dependencies
# A wildcard is used to ensure both package.json AND package-lock.json are copied
# where available (npm@5+)
COPY package*.json ./

RUN npm install
# If you are building your code for production
# RUN npm ci --only=production

# Bundle app source
COPY . .

EXPOSE 3000
CMD [ "node", "server.js" ]

Create a .dockerignore file.

node_modules
npm-debug.log

Now we can build the image and push it to Docker Hub or some other image repo. Use your own repo in place of klimenta/secretsdemo.

sudo docker build . -t klimenta/secretsdemo
sudo docker images
sudo docker push klimenta/secretsdemo

Create a file to deploy 2 replicas and a load balancer. Save it as something.yaml and deploy it. Analyze the file and see how we use the service account, secret provider class and the image.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: demosecret
  namespace: default
spec:
  replicas: 2
  selector:
    matchLabels:
      run: demosecret
  template:
    metadata:
      labels:
        run: demosecret
    spec:
      serviceAccountName: sademosecret
      volumes:
      - name: secretsvolume
        csi:
          driver: secrets-store.csi.k8s.io
          readOnly: true
          volumeAttributes:
            secretProviderClass: "spc-demosecret"
      containers:
      - name: demosecret
        image: klimenta/secretsdemo
        volumeMounts:
        - name: secretsvolume
          mountPath: "/mnt/secrets-store"
          readOnly: true
        ports:
        - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer
  annotations:
    service.beta.kubernetes.io/aws-load-balancer-type: nlb
    service.beta.kubernetes.io/aws-load-balancer-nlb-target-type: ip
    service.beta.kubernetes.io/aws-load-balancer-scheme: internet-facing
spec:
  ports:
    - port: 80
      targetPort: 3000
      protocol: TCP
  type: LoadBalancer
  selector:
    run: demosecret

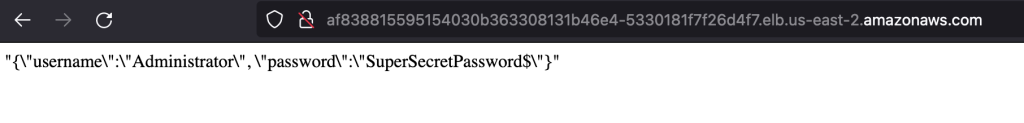

Once you deploy with kubectl apply -f something.yaml, type kubectl get svc and you’ll see a public URL.

If you go to that URL, you’ll see our secret.