In this post I’ll explain how to install MetalLB, a load balancer for bare-metal or VM-based Kubernetes clusters. If you have a home lab for playing with Kubernetes and you are not using AKS, EKS or GKE, then this post is for you.

The prerequisite is a running Kubernetes cluster. In my earlier post, I described how to install Kubernetes with CRI-O and Cilium as the CNI, so you can follow that post or use your own install. Mind that if you use another CNI such as Calico or Weave, there are some things you have to check first to see whether they apply to you. Look at this link.

We’ll install MetalLB now. You should have your k8s master and the nodes ready.

Install MetalLB

Do this first on the master.

kubectl edit configmap -n kube-system kube-proxy

…and change strictARP to true.

apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: "ipvs"
ipvs:
  strictARP: true
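If you'd rather not open an editor, the same change can be scripted. This is a minimal sketch modeled on the one-liner in the MetalLB documentation. The function names are my own, and it assumes the ConfigMap currently contains the literal text `strictARP: false`.

```shell
# Flip strictARP from false to true in whatever YAML arrives on stdin.
set_strict_arp() {
  sed -e 's/strictARP: false/strictARP: true/'
}

# Read the kube-proxy ConfigMap, rewrite it, and apply it back.
# (Hypothetical helper name; needs kubectl access to the cluster.)
patch_kube_proxy() {
  kubectl get configmap kube-proxy -n kube-system -o yaml \
    | set_strict_arp \
    | kubectl apply -f - -n kube-system
}
```

Call `patch_kube_proxy` on the master. If the ConfigMap has no `strictARP: false` line, nothing changes and you'll have to edit it by hand as shown above.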

Deploy MetalLB.

kubectl apply -f https://raw.githubusercontent.com/metallb/metallb/v0.13.11/config/manifests/metallb-native.yaml

You’ll see the pods running in metallb-system namespace.

kubectl get pods -n metallb-system
NAME                          READY   STATUS    RESTARTS   AGE
controller-7d56b4f464-gkfrq   1/1     Running   0          55s
speaker-l5954                 1/1     Running   0          55s
speaker-xrswp                 1/1     Running   0          55s
speaker-z8vdt                 1/1     Running   0          55s

Let’s configure the load balancer to hand out IPs from the 192.168.1.50-192.168.1.60 range. Change the range on line 8 to suit your network, and pick addresses that nothing else on your network is using.
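Instead of re-running `kubectl get pods` until everything is up, you can let kubectl wait for you. A small sketch: the function name is hypothetical, and the `app=metallb` selector is my assumption about the labels the manifest puts on its pods, so check with `kubectl get pods -n metallb-system --show-labels` if it matches nothing.

```shell
# Block until every MetalLB pod reports Ready, or give up after a timeout.
# Argument: timeout (defaults to 90s).
# The app=metallb label is an assumption about the manifest's pod labels.
wait_for_metallb() {
  local timeout="${1:-90s}"
  kubectl wait --namespace metallb-system \
    --for=condition=ready pod \
    --selector=app=metallb \
    --timeout="$timeout"
}
```

Waiting here is worth it, because applying the address pool config before the controller is ready can fail.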

Save it as a lb-config.yaml file.

apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
  - 192.168.1.50-192.168.1.60
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example
  namespace: metallb-system

Deploy the config.

kubectl apply -f lb-config.yaml
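If you rebuild your lab often, it may be handy to generate the config from the range instead of editing the file. A sketch, assuming the same resource names as above; `render_lb_config` is a name I made up.

```shell
# Print an IPAddressPool + L2Advertisement manifest for the given range.
# Arguments: first IP, last IP. Resource names match the ones used above.
render_lb_config() {
  local first_ip="$1" last_ip="$2"
  cat <<EOF
apiVersion: metallb.io/v1beta1
kind: IPAddressPool
metadata:
  name: first-pool
  namespace: metallb-system
spec:
  addresses:
  - ${first_ip}-${last_ip}
---
apiVersion: metallb.io/v1beta1
kind: L2Advertisement
metadata:
  name: example
  namespace: metallb-system
EOF
}
```

For example, `render_lb_config 192.168.1.50 192.168.1.60 > lb-config.yaml` writes the same file as the one above.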

Now, we can deploy a simple app and a load balancer. Save the file as demo.yaml.

This is an app that I made in Node.js that listens on port 3000 to display the IP of the pod that the load balancer hits.

You can use it for any type of a load balancer, not just MetalLB. It’s a super simple app that prints the IP of the pod where it’s running.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo
spec:
  replicas: 6
  selector:
    matchLabels:
      run: demo
  template:
    metadata:
      labels:
        run: demo
    spec:
      containers:
      - name: demo
        image: klimenta/serverip
        ports:
        - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer
spec:
  ports:
  - port: 80
    targetPort: 3000
    protocol: TCP
  type: LoadBalancer
  selector:
    run: demo

Deploy the app.

kubectl apply -f demo.yaml

Check the load balancer service.

kubectl get svc
NAME           TYPE           CLUSTER-IP     EXTERNAL-IP    PORT(S)        AGE
kubernetes     ClusterIP      10.96.0.1      <none>         443/TCP        33m
loadbalancer   LoadBalancer   10.106.36.58   192.168.1.50   80:30770/TCP   4m33s

…and if you go to that IP (192.168.1.50) in your browser, you’ll see the IP of the pods that the load balancer hits.
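A browser tends to reuse its connection, so you may keep seeing the same pod. To watch the load balancer spread requests, you can hit it from the command line instead. This is a rough sketch with made-up function names, and it assumes the app answers with plain text.

```shell
# Request the URL N times (default 20) and print each response on its own line.
hit_lb() {
  local url="$1" count="${2:-20}" i=0
  while [ "$i" -lt "$count" ]; do
    curl -s "$url"
    echo
    i=$((i + 1))
  done
}

# Tally how often each distinct response came back, most frequent first.
tally_responses() {
  grep -v '^$' | sort | uniq -c | sort -rn
}
```

Run it as `hit_lb http://192.168.1.50 | tally_responses`. With 6 replicas you should see several different pod IPs in the tally.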

Install nginx ingress reverse proxy

The problem with the scenario above is that for every service you need to expose, you need a different IP from the IP pool that we’ve assigned to MetalLB (.50-.60).

A better solution is to install an nginx ingress that will act as a reverse proxy. For this you’ll need working name resolution (a DNS server, for example), because the services will share the same IP but have different FQDNs.

So, in practice it looks like this.

Install nginx ingress first.

kubectl apply -f https://raw.githubusercontent.com/kubernetes/ingress-nginx/controller-v1.8.2/deploy/static/provider/cloud/deploy.yaml
kubectl get pods -n ingress-nginx
kubectl get service ingress-nginx-controller -n=ingress-nginx

Don’t worry if two of the nginx pods are not running and show “Completed”. Those are one-off admission jobs that exit when they finish.

You’ll see the external IP that’s assigned to nginx from MetalLB.
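If you want just the IP, for a script or for your DNS records, something like this should do. The wait command is modeled on the ingress-nginx docs; the function names are mine.

```shell
# Wait for the ingress controller pod to become Ready.
wait_for_ingress() {
  kubectl wait --namespace ingress-nginx \
    --for=condition=ready pod \
    --selector=app.kubernetes.io/component=controller \
    --timeout=120s
}

# Print only the external IP that MetalLB assigned to the controller Service.
ingress_ip() {
  kubectl get service ingress-nginx-controller -n ingress-nginx \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}
```

Call `wait_for_ingress` first, then `ingress_ip`. An empty result means MetalLB hasn't assigned an address yet.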

For some reason, nginx ingress won’t work without the following command. Many people have this problem in a bare-metal environment and this is the workaround.

kubectl delete -A ValidatingWebhookConfiguration ingress-nginx-admission

Now, let’s create an nginx web server. This has nothing to do with nginx ingress, it’s completely separate.

I am exposing the nginx deployment and creating a rule that will hit the deployment on port 80 with nginx.homelab.local as the FQDN.

NOTE: If your browser can’t resolve this URL, it won’t work. So, make sure you have a DNS or /etc/hosts file or some type of name resolution.

kubectl create deployment nginx --image=nginx --port=80
kubectl expose deployment nginx
kubectl create ingress nginx --class=nginx --rule nginx.homelab.local/=nginx:80

Then, let’s install Apache server that runs on the same IP and same port 80.

kubectl create deployment httpd --image=httpd --port=80
kubectl expose deployment httpd
kubectl create ingress httpd --class=nginx --rule httpd.homelab.local/=httpd:80

If everything is OK, you’ll be able to access both web servers using their URLs.
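If you don't have DNS set up yet, here are two ways around it, sketched as small helpers. The function names are hypothetical, and the IP you pass in should be the external IP of the ingress controller, not necessarily the one in my example.

```shell
# Print an /etc/hosts line mapping one IP to one or more hostnames.
hosts_line() {
  local ip="$1"
  shift
  printf '%s %s\n' "$ip" "$*"
}

# Fetch a site through the ingress without touching DNS or /etc/hosts:
# --resolve pins host:80 to the given IP for this one request.
# Arguments: ingress IP, hostname.
fetch_via_ingress() {
  curl -s --resolve "$2:80:$1" "http://$2/"
}
```

For a permanent entry, `hosts_line 192.168.1.51 nginx.homelab.local httpd.homelab.local | sudo tee -a /etc/hosts` appends the line. For a quick test, `fetch_via_ingress 192.168.1.51 httpd.homelab.local` should return the Apache default page.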

If you want to put Grafana and/or KubeCost behind nginx ingress, read these short posts here and here.

If you want to use yaml for deployment, here is an example of that. It creates 6 replicas of a pod that prints the IP where the pod is running. The service runs on port 3000. Then it creates a ClusterIP on the same port and puts the ClusterIP behind the ingress. Change the hostname at line 39.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo
spec:
  replicas: 6
  selector:
    matchLabels:
      run: demo
  template:
    metadata:
      labels:
        run: demo
    spec:
      containers:
      - name: demo
        image: klimenta/getmyip
        ports:
        - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: demo
spec:
  type: ClusterIP
  ports:
  - port: 3000
    targetPort: 3000
  selector:
    run: demo
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  rules:
  - host: demo.homelab.local
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: demo
            port:
              number: 3000
  ingressClassName: nginx

SSL certificates

The examples above allow you to run multiple websites behind a reverse proxy on port 80, but in reality you want everything on port 443 and with SSL certificates. I’ll describe two scenarios: one with a valid certificate and one with a self-signed certificate. Fortunately, nginx ingress listens on port 443 and automatically redirects from http to https, so you don’t have to worry about any changes.

Valid SSL certificate

You will need a valid SSL certificate for this. And you will need the private key, the certificate, the CA root certificate and the intermediate certificate. You can get these from the vendor where you purchased the certificate. In my case, I’ll have the private key saved as domain.key, the certificate as domain.pem, the root CA certificate as domain.ca and the intermediate certificate as domain.inter.

IMPORTANT: nginx expects the certificate chain in exact order: PEM -> INTERMEDIATE -> ROOT CA. If you have just the private key and the certificate (without inter and root CA), it might work, but you might get some errors when using curl, such as that the certificate authority cannot be recognized.

So, create a file called domain.full.pem or whatever that has all 3 certificates in that order, e.g. cat domain.pem domain.inter domain.ca > domain.full.pem.

If you received a bundle file from the certificate vendor, create a chain from two files: domain.pem plus the bundle file. The bundle file contains the intermediate and the root certificate.
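Since the order matters, it's worth a quick sanity check on the combined file before you load it into Kubernetes. A minimal sketch with made-up function names; `show_chain` needs openssl installed.

```shell
# Count the certificates in a PEM bundle (expect 3: cert, intermediate, root).
count_certs() {
  grep -c -e '-----BEGIN CERTIFICATE-----' "$1"
}

# Print subject and issuer of every certificate in the bundle, in file order.
# Each issuer should match the subject of the certificate that follows it.
show_chain() {
  openssl crl2pkcs7 -nocrl -certfile "$1" \
    | openssl pkcs7 -print_certs -noout
}
```

`count_certs domain.full.pem` should print 3, and `show_chain domain.full.pem` should list your domain first and the root CA last.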

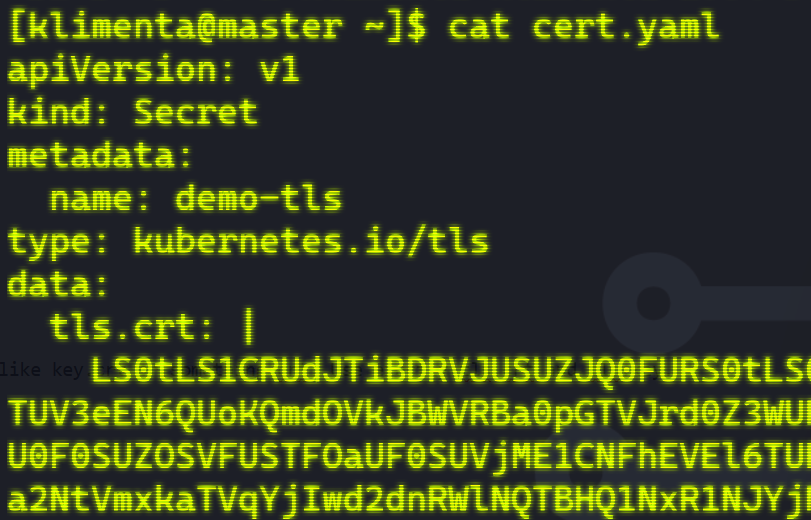

Now that you have these files, you need to create a TLS secret in Kubernetes where you’ll store your certificate. You can do that with kubectl or with a YAML file.

kubectl create secret tls demo-tls --key domain.key --cert domain.full.pem

Keep the secret, ingress and the deployment in the same namespace.

If you use YAML, which most likely you’ll do if you use automation, you’ll have to encode the certificates.

This is the template YAML file. Name it as tls.yaml.

apiVersion: v1
kind: Secret
metadata:
  name: demo-tls
type: kubernetes.io/tls
data:
  tls.crt: |
    <insert_base64_string>
  tls.key: |
    <insert_base64_string>

Don’t change data keys tls.crt and tls.key. You have to use them, not something like key.crt or something.crt. Use exactly tls.crt and tls.key.

Encode the certificate with base64.

base64 -w0 domain.full.pem

Encode the key with base64.

base64 -w0 domain.key

You’ll get two long strings that start with LS0… Copy and paste these strings into the YAML file and make sure they are properly indented.

Now, you can create the secret.

kubectl apply -f tls.yaml
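The encode-and-paste steps are easy to get wrong by hand, so for automation you may prefer to generate tls.yaml. Here's a sketch; the function names are hypothetical, and I pipe through `tr` instead of using `-w0` so it also works where base64 doesn't support that flag.

```shell
# Base64-encode a file as a single line (portable alternative to base64 -w0).
b64_file() {
  base64 < "$1" | tr -d '\n'
}

# Print a kubernetes.io/tls Secret manifest.
# Arguments: secret name, certificate chain file, private key file.
render_tls_secret() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
type: kubernetes.io/tls
data:
  tls.crt: $(b64_file "$2")
  tls.key: $(b64_file "$3")
EOF
}
```

Use it as `render_tls_secret demo-tls domain.full.pem domain.key > tls.yaml`. Adding `--dry-run=client -o yaml` to the `kubectl create secret tls` command from earlier should give you an equivalent manifest without any helper.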

All you have to do now is reference the TLS secret that we created. Compare the tls section in this YAML with the previous one.

apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  tls:
  - hosts:
    - demo.iandreev.com
    secretName: demo-tls
  rules:
  - host: demo.iandreev.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: demo
            port:
              number: 3000
  ingressClassName: nginx

Change the hostname in both places (under tls.hosts and under rules.host) to match your cert and you can deploy the app.

Self-signed certificates

First, we have to create a Certificate Authority (CA). I’ll use homelab.local as my domain.

openssl req -x509 -nodes -sha512 -days 365 -newkey rsa:4096 \
  -keyout rootCA.key -out rootCA.crt -subj "/C=US/CN=HomeLab Certificate Authority"

You’ll get two files, rootCA.key (the private key) and rootCA.crt.

Create a private key for the wildcard homelab.local domain and a certificate signing request file.

You’ll get two files, star.homelab.local.key (the private key for the domain) and star.homelab.local.csr (the certificate signing request).

openssl req -new -nodes -sha512 -days 365 -newkey rsa:4096 \
  -keyout star.homelab.local.key -out star.homelab.local.csr -subj "/C=US/ST=NJ/L=Trenton/O=HomeLab Org/CN=*.homelab.local"

Create this file where you’ll specify your domain name and, optionally, your IP. The IP is not necessary.

cat << EOF > v3.ext
authorityKeyIdentifier=keyid,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, nonRepudiation, keyEncipherment, dataEncipherment
subjectAltName = @alt_names

[alt_names]
DNS.1 = *.homelab.local
IP.1 = 192.168.1.50
EOF

Sign the certificate signing request and create the certificate file. You’ll get one certificate file star.homelab.local.crt.

openssl x509 -req -sha512 -days 365 -in star.homelab.local.csr -CA rootCA.crt -CAkey rootCA.key \
  -CAcreateserial -out star.homelab.local.crt -extfile v3.ext
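Before moving on, you can check that the new certificate really chains to your CA and carries the wildcard name. A small sketch with hypothetical function names, using plain openssl subcommands.

```shell
# Check that a certificate chains up to the given CA.
# Arguments: CA certificate file, certificate file to verify.
verify_against_ca() {
  openssl verify -CAfile "$1" "$2"
}

# Print the Subject Alternative Name section of a certificate.
show_san() {
  openssl x509 -in "$1" -noout -text \
    | grep -A1 'Subject Alternative Name'
}
```

`verify_against_ca rootCA.crt star.homelab.local.crt` should end with `OK`, and `show_san star.homelab.local.crt` should list `*.homelab.local` along with the IP if you kept it in v3.ext.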

Combine the certificate and the CA certificate in a single file.

cat star.homelab.local.crt rootCA.crt > star.homelab.local.chain.crt

Create this tls.yaml template file.

apiVersion: v1
kind: Secret
metadata:
  name: demo-tls
type: kubernetes.io/tls
data:
  tls.crt: |
    <insert_base64_string>
  tls.key: |
    <insert_base64_string>

Encode both the domain certificate chain file and the domain private key file.

base64 -w0 star.homelab.local.chain.crt
base64 -w0 star.homelab.local.key

You’ll get two very long single lines. Add these two lines to the tls.yaml template file but make sure they are properly indented (see the screenshot in the previous section).

Now, you can create the secret.

kubectl apply -f tls.yaml

You can also use kubectl to create the secret, see the previous section.

Save this file as demo-local.yaml and deploy it using kubectl apply -f demo-local.yaml.

apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo
spec:
  replicas: 6
  selector:
    matchLabels:
      run: demo
  template:
    metadata:
      labels:
        run: demo
    spec:
      containers:
      - name: demo
        image: klimenta/getmyip
        ports:
        - containerPort: 3000
---
apiVersion: v1
kind: Service
metadata:
  name: demo
spec:
  type: ClusterIP
  ports:
  - port: 3000
    targetPort: 3000
  selector:
    run: demo
---
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-demo
spec:
  tls:
  - hosts:
    - demo.homelab.local
    secretName: demo-tls
  rules:
  - host: demo.homelab.local
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: demo
            port:
              number: 3000
  ingressClassName: nginx

You’ll be able to go to your browser and check demo.homelab.local and see the page after the SSL warning.

But if you double-click the rootCA.crt file on your local machine, you can import the certificate in the Trusted Root Certificates store and you won’t get that warning anymore.
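From the command line you can get the same effect without importing anything, by pointing curl at the CA file. A sketch; `curl_with_ca` is a name I made up, and it assumes demo.homelab.local already resolves to the ingress IP.

```shell
# Request a site over HTTPS while trusting only the given CA file.
# Arguments: CA certificate file, hostname.
curl_with_ca() {
  curl -s --cacert "$1" "https://$2/"
}
```

`curl_with_ca rootCA.crt demo.homelab.local` should return the page with no certificate error. On Debian or Ubuntu, copying rootCA.crt into /usr/local/share/ca-certificates/ and running `sudo update-ca-certificates` should make the whole system trust it.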