In this post, I’ll explain how to create a complete environment consisting of a new VPC, two public subnets, two private subnets, a bastion host and two application hosts that run the nginx web server. The application hosts sit behind an application load balancer. The bastion host lives in a public subnet and exists so that we can reach the application hosts, which are in the private subnets and have no direct public access. We’ll use the official CentOS 7 image from the AWS Marketplace, modify it with Packer and bake our own image with Ansible installed locally. Once the servers are deployed, they’ll already have nginx installed and started, serving a fully functional template website from GitHub (courtesy of free-css.com). This is what it looks like in a diagram.

Before you start, I suggest you create a directory where you are going to place all these files. Make sure to execute each command from that directory unless told otherwise.

Packer and ansible

Packer is super easy to install. Just get the executable and place it somewhere in your path. Once you are ready to create an image, specify your AWS access key and secret key. The IAM user associated with these keys must be allowed to create an AMI. This is my Packer file.

{
  "variables": {
    "aws_access_key": "",
    "aws_secret_key": "",
    "ami_name": "centos7-USWTA",
    "region": "us-east-1",
    "source_ami": "ami-02eac2c0129f6376b",
    "instance_type": "t2.micro"
  },
  "builders": [{
    "access_key": "{{user `aws_access_key`}}",
    "secret_key": "{{user `aws_secret_key`}}",
    "ami_name": "{{user `ami_name`}}.{{timestamp}}",
    "region": "{{user `region`}}",
    "source_ami": "{{user `source_ami`}}",
    "instance_type": "{{user `instance_type`}}",
    "ssh_username": "centos",
    "type": "amazon-ebs"
  }],
  "provisioners": [
    {
      "type": "shell",
      "inline": ["sudo yum -y install epel-release"]
    },
    {
      "type": "shell",
      "inline": ["sudo yum -y install ansible"]
    },
    {
      "type": "file",
      "source": "ansible.yml",
      "destination": "/home/centos/ansible.yml"
    },
    {
      "type": "ansible-local",
      "playbook_file": "ansible.yml"
    }
  ]
}

You could hard-code your access keys in the template, but you don’t want to; leave them empty and pass them on the command line instead. You can change the name of the AMI, the region and the instance type. Don’t change the source AMI variable unless you also change the region: it’s the ID of the official CentOS 7 image in us-east-1, and AMI IDs are region-specific. In the provisioners section, we install the EPEL repo so that we can install Ansible. Packer also copies the local Ansible playbook to /home/centos in the new image and then runs it, so you need the ansible.yml playbook in the same directory as the packer.json file.

This is the Ansible playbook. You definitely won’t do it this way in production. In my case, I rename the original HTML root and clone a GitHub repo in its place. You’ll probably want to clone the site into a separate directory and use a modified nginx.conf file that suits your needs.

---
- hosts: localhost
  become: true
  tasks:
    - name: install nginx
      yum:
        name: nginx
        state: present
    - name: start nginx
      service:
        name: nginx
        state: started
        enabled: yes
    - name: install git
      yum:
        name: git
        state: present
    - name: remove the original html
      shell: |
        mv /usr/share/nginx/html /usr/share/nginx/html.old
    - name: clone web template
      git:
        repo: https://github.com/klimenta/webtemplate
        dest: /usr/share/nginx/html
        version: master

This playbook installs nginx and git, moves the default HTML root out of the way and clones a website template from my GitHub account in its place. With both files ready (ansible.yml and packer.json), you can create your own image. Substitute your own access key and secret key and run this command.

packer build -var 'aws_access_key=AKIA.....' -var 'aws_secret_key=Dp123Sm4.....' packer.json

It takes about 5-6 minutes to bake the AMI. If you go to your AWS account, you’ll see it there.

With our image ready, we can deploy the infrastructure using Terraform. But before we do that, we need to create the key pair that we’ll use to log in to our servers. In a production environment you’d want separate keys for the bastion host and the app hosts, but for the sake of simplicity, we’ll use one key to rule them all.

Type this command and hit Enter twice to skip the passphrase.

ssh-keygen -t rsa -f $PWD/keyUSWTA -b 2048

You’ll have two files, keyUSWTA and keyUSWTA.pub. keyUSWTA is your private key; don’t share it with anyone. The public key will be registered with AWS and placed on the instances we spin up from our image. If you use PuTTY instead of ssh, you have to convert your private key to the PPK format.

Terraform

Terraform is also super easy to install. Just copy the executable somewhere in your path and you are ready to go. We’ll use four separate files to build the infra. The first file is variables.tf, where we declare all the variables in use. The second file is terraform.tfvars, where we assign values to those variables. The first file can go to GitHub or any other version control system; the second one should not, especially if it contains passwords and keys. But it’s up to you. The third file is outputs.tf. Terraform prints the values defined in this file when it’s done provisioning. For example, you may want to see the external IP of the instance you just created without going to the AWS console to find out. The fourth file is main.tf, where we define our infrastructure. So, here they are.

variables.tf

variable "aws_region" {}
variable "aws_az1" {}
variable "aws_az2" {}
variable "aws_profile" {}
variable "vpc_cidr" {}
variable "sub_private1_cidr" {}
variable "sub_private2_cidr" {}
variable "sub_public1_cidr" {}
variable "sub_public2_cidr" {}
variable "bastion_ami_id" {}
variable "instance_type" {}
variable "ip_address" {}
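The empty declarations above work, but Terraform also lets a variable carry a type, a description and a validation rule. As a minimal sketch (the description and the error message are illustrative wording, and validation blocks assume Terraform 0.13 or newer), the ip_address declaration could be replaced with something like this, so that a malformed CIDR fails early instead of at apply time:

```hcl
# Sketch only: would replace the empty `variable "ip_address" {}` declaration.
variable "ip_address" {
  type        = string
  description = "CIDR block allowed to SSH to the bastion host, e.g. 1.2.3.4/32"

  validation {
    # cidrhost() errors on anything that is not valid CIDR notation,
    # and can() turns that error into false.
    condition     = can(cidrhost(var.ip_address, 0))
    error_message = "The ip_address value must be a valid CIDR block such as 1.2.3.4/32."
  }
}
```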

terraform.tfvars

aws_profile       = "default"
aws_region        = "us-east-1"
aws_az1           = "us-east-1a"
aws_az2           = "us-east-1b"
vpc_cidr          = "192.168.200.0/23"
sub_public1_cidr  = "192.168.200.0/25"
sub_public2_cidr  = "192.168.200.128/25"
sub_private1_cidr = "192.168.201.0/25"
sub_private2_cidr = "192.168.201.128/25"
bastion_ami_id    = "ami-02eac2c0129f6376b"
instance_type     = "t2.micro"
ip_address        = "1.2.3.4/32"

This means I’ll use my default AWS CLI profile and deploy to the us-east-1 region, using the us-east-1a and us-east-1b availability zones. Then come the CIDRs for the VPC and the public and private subnets. The bastion host is based on the AMI listed there, which is the official CentOS 7 image, and all three instances are t2.micro. SSH access to the bastion host is allowed from the 1.2.3.4 IP address only. Change the values to suit your needs.
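The four subnet CIDRs are simply the four /25 blocks that fit inside the /23 VPC range. If you would rather not type them by hand, Terraform’s built-in cidrsubnet function can derive them. This is only a sketch of that idea: the local names are made up for illustration, the comments assume vpc_cidr is 192.168.200.0/23, and main.tf below does not use these locals.

```hcl
# Hypothetical locals; the post's main.tf uses the sub_*_cidr variables instead.
locals {
  # cidrsubnet(prefix, newbits, netnum): adding 2 bits to a /23 yields /25 blocks.
  sub_public1_cidr  = cidrsubnet(var.vpc_cidr, 2, 0) # 192.168.200.0/25
  sub_public2_cidr  = cidrsubnet(var.vpc_cidr, 2, 1) # 192.168.200.128/25
  sub_private1_cidr = cidrsubnet(var.vpc_cidr, 2, 2) # 192.168.201.0/25
  sub_private2_cidr = cidrsubnet(var.vpc_cidr, 2, 3) # 192.168.201.128/25
}
```

With something like this in place, a subnet would reference local.sub_public1_cidr rather than var.sub_public1_cidr, and changing the VPC range would move all four subnets with it.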

outputs.tf

output "aws_lb" {
  value = aws_lb.albUSWTA.dns_name
}

output "aws_ip" {
  value = aws_instance.ec2USWTABastion.public_ip
}

output "aws_app1_ip" {
  value = aws_instance.ec2USWTAApplication1.private_ip
}

output "aws_app2_ip" {
  value = aws_instance.ec2USWTAApplication2.private_ip
}

These are the values that will be dumped on your screen, once Terraform completes. You’ll see the URL of your load balancer which is how you’ll access your website. Then, the public IP of the bastion host and the private IPs of your app instances in the private subnet.
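Outputs can also be computed strings, which is handy for things you would otherwise assemble by hand. As a sketch, the two extra outputs below (their names are hypothetical and not part of the outputs.tf above) print the site URL and an SSH command that hops through the bastion host to the first application host. The command assumes the centos login user of the CentOS image, an OpenSSH client that supports the -J jump-host option (7.3 or later), and the private key loaded into ssh-agent so that it is offered to both hosts.

```hcl
# Hypothetical additions to outputs.tf
output "site_url" {
  value = "http://${aws_lb.albUSWTA.dns_name}"
}

output "ssh_app1_via_bastion" {
  # Run `ssh-add keyUSWTA` first; -J makes ssh connect through the bastion.
  value = "ssh -J centos@${aws_instance.ec2USWTABastion.public_ip} centos@${aws_instance.ec2USWTAApplication1.private_ip}"
}
```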

And finally, this is the main.tf file that provisions the infrastructure.

provider "aws" {
  region  = var.aws_region
  profile = var.aws_profile
}

# VPC
resource "aws_vpc" "vpcUSWTA" {
  cidr_block = var.vpc_cidr
  tags = {
    Name = "vpcUSWTA"
  }
}

# Public subnet 1
resource "aws_subnet" "subPublic1" {
  vpc_id            = aws_vpc.vpcUSWTA.id
  cidr_block        = var.sub_public1_cidr
  availability_zone = var.aws_az1
  tags = {
    Name = "subPublic - USWTA - 1"
  }
}

# Public subnet 2
resource "aws_subnet" "subPublic2" {
  vpc_id            = aws_vpc.vpcUSWTA.id
  cidr_block        = var.sub_public2_cidr
  availability_zone = var.aws_az2
  tags = {
    Name = "subPublic - USWTA - 2"
  }
}

# Private subnet 1
resource "aws_subnet" "subPrivate1" {
  vpc_id            = aws_vpc.vpcUSWTA.id
  cidr_block        = var.sub_private1_cidr
  availability_zone = var.aws_az1
  tags = {
    Name = "subPrivate - USWTA - 1"
  }
}

# Private subnet 2
resource "aws_subnet" "subPrivate2" {
  vpc_id            = aws_vpc.vpcUSWTA.id
  cidr_block        = var.sub_private2_cidr
  availability_zone = var.aws_az2
  tags = {
    Name = "subPrivate - USWTA - 2"
  }
}

# Internet gateway
resource "aws_internet_gateway" "igwUSWTA" {
  vpc_id = aws_vpc.vpcUSWTA.id
  tags = {
    Name = "igwInternetGateway - USWTA"
  }
}

# Key pair
resource "aws_key_pair" "keyUSWTA" {
  key_name   = "Key for USWTA"
  public_key = file("${path.module}/keyUSWTA.pub")
}

# Security group for bastion host
resource "aws_security_group" "sgUSWTABastion" {
  name        = "sgUSWTA - Bastion"
  description = "Allow access on port 22 from restricted IP"
  vpc_id      = aws_vpc.vpcUSWTA.id
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [var.ip_address]
  }
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
  tags = {
    Name = "Allow access on port 22 from my IP"
  }
}

# Security group for application load balancer
resource "aws_security_group" "sgUSWTAALB" {
  name        = "sgUSWTA - ALB"
  description = "Allow access on port 80 from everywhere"
  vpc_id      = aws_vpc.vpcUSWTA.id
  ingress {
    from_port   = 80
    to_port     = 80
    protocol    = "tcp"
    cidr_blocks = ["0.0.0.0/0"]
  }
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
  tags = {
    Name = "Allow HTTP access from everywhere"
  }
}

# Security group for application hosts
resource "aws_security_group" "sgUSWTAApplication" {
  name        = "sgUSWTA - Application"
  description = "Allow access on ports 22 and 80"
  vpc_id      = aws_vpc.vpcUSWTA.id
  ingress {
    from_port   = 22
    to_port     = 22
    protocol    = "tcp"
    cidr_blocks = [var.sub_public1_cidr]
  }
  ingress {
    from_port       = 80
    to_port         = 80
    protocol        = "tcp"
    security_groups = [aws_security_group.sgUSWTAALB.id]
  }
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
  tags = {
    Name = "Allow access on ports 22 and 80"
  }
}

# Get the latest image
data "aws_ami" "image" {
  most_recent = true
  owners      = ["self"]
  filter {
    name   = "name"
    values = ["centos7-USWTA*"]
  }
}

# ec2 instance - bastion host
resource "aws_instance" "ec2USWTABastion" {
  ami                         = var.bastion_ami_id
  instance_type               = var.instance_type
  key_name                    = aws_key_pair.keyUSWTA.key_name
  vpc_security_group_ids      = [aws_security_group.sgUSWTABastion.id]
  subnet_id                   = aws_subnet.subPublic1.id
  associate_public_ip_address = true
  root_block_device {
    delete_on_termination = true
  }
  tags = {
    Name = "ec2USWTA - Bastion"
  }
}

# ec2 instance - app host 1
resource "aws_instance" "ec2USWTAApplication1" {
  ami                         = data.aws_ami.image.id
  instance_type               = var.instance_type
  key_name                    = aws_key_pair.keyUSWTA.key_name
  vpc_security_group_ids      = [aws_security_group.sgUSWTAApplication.id]
  subnet_id                   = aws_subnet.subPrivate1.id
  associate_public_ip_address = false
  root_block_device {
    delete_on_termination = true
  }
  tags = {
    Name = "ec2USWTA - Application - 1"
  }
}

# ec2 instance - app host 2
resource "aws_instance" "ec2USWTAApplication2" {
  ami                         = data.aws_ami.image.id
  instance_type               = var.instance_type
  key_name                    = aws_key_pair.keyUSWTA.key_name
  vpc_security_group_ids      = [aws_security_group.sgUSWTAApplication.id]
  subnet_id                   = aws_subnet.subPrivate2.id
  associate_public_ip_address = false
  root_block_device {
    delete_on_termination = true
  }
  tags = {
    Name = "ec2USWTA - Application - 2"
  }
}

# Elastic IP for the NAT gateway
resource "aws_eip" "eipUSWTA" {
  # AWS provider 5.x deprecates this argument in favor of domain = "vpc"
  vpc = true
  tags = {
    Name = "eipUSWTA"
  }
}

# NAT gateway
resource "aws_nat_gateway" "ngwUSWTA" {
  allocation_id = aws_eip.eipUSWTA.id
  subnet_id     = aws_subnet.subPublic1.id
  tags = {
    Name = "ngwUSWTA"
  }
}

# Add route to Internet to main route table
resource "aws_route" "rtMainRoute" {
  route_table_id         = aws_vpc.vpcUSWTA.main_route_table_id
  destination_cidr_block = "0.0.0.0/0"
  # A NAT gateway target goes in nat_gateway_id;
  # gateway_id is meant for internet or virtual private gateways
  nat_gateway_id = aws_nat_gateway.ngwUSWTA.id
}

# Create public route table
resource "aws_route_table" "rtPublic" {
  vpc_id = aws_vpc.vpcUSWTA.id
  tags = {
    Name = "rtPublic - USWTA"
  }
}

# Add route to Internet to public route table
resource "aws_route" "rtPublicRoute" {
  route_table_id         = aws_route_table.rtPublic.id
  destination_cidr_block = "0.0.0.0/0"
  gateway_id             = aws_internet_gateway.igwUSWTA.id
}

# Associate public route table with public subnet 1
resource "aws_route_table_association" "rtPubAssoc1" {
  subnet_id      = aws_subnet.subPublic1.id
  route_table_id = aws_route_table.rtPublic.id
}

# Associate public route table with public subnet 2
resource "aws_route_table_association" "rtPubAssoc2" {
  subnet_id      = aws_subnet.subPublic2.id
  route_table_id = aws_route_table.rtPublic.id
}

# Application Load Balancer
resource "aws_lb" "albUSWTA" {
  name               = "albUSWTA"
  internal           = false
  load_balancer_type = "application"
  subnets            = [aws_subnet.subPublic1.id, aws_subnet.subPublic2.id]
  security_groups    = [aws_security_group.sgUSWTAALB.id]
  tags = {
    Name = "Application Load Balancer for USWTA"
  }
}

# Target group
resource "aws_lb_target_group" "tgUSWTA" {
  name     = "tgUSWTA"
  port     = "80"
  protocol = "HTTP"
  vpc_id   = aws_vpc.vpcUSWTA.id
  health_check {
    healthy_threshold   = 5
    unhealthy_threshold = 2
    timeout             = 5
    path                = "/index.html"
    port                = 80
    matcher             = "200"
    interval            = 30
  }
}

# Listener
resource "aws_lb_listener" "lisUSWTA" {
  load_balancer_arn = aws_lb.albUSWTA.arn
  port              = "80"
  protocol          = "HTTP"
  default_action {
    type             = "forward"
    target_group_arn = aws_lb_target_group.tgUSWTA.arn
  }
}

# Add instance 1 to target group
resource "aws_lb_target_group_attachment" "tgaUSWTA1" {
  target_group_arn = aws_lb_target_group.tgUSWTA.arn
  target_id        = aws_instance.ec2USWTAApplication1.id
  port             = "80"
}

# Add instance 2 to target group
resource "aws_lb_target_group_attachment" "tgaUSWTA2" {
  target_group_arn = aws_lb_target_group.tgUSWTA.arn
  target_id        = aws_instance.ec2USWTAApplication2.id
  port             = "80"
}
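One possible tightening, shown only as a sketch and not as part of the setup in this post: the application security group in main.tf allows SSH from the whole first public subnet. Since the bastion host is the only machine meant to reach the application hosts, the port 22 rule could reference the bastion’s security group instead, the same way the port 80 rule references the load balancer’s group. The block below would replace the existing sgUSWTAApplication resource rather than sit next to it.

```hcl
# Sketch: variant of sgUSWTAApplication where SSH is allowed only from
# instances that carry the bastion security group.
resource "aws_security_group" "sgUSWTAApplication" {
  name        = "sgUSWTA - Application"
  description = "Allow access on ports 22 and 80"
  vpc_id      = aws_vpc.vpcUSWTA.id
  ingress {
    from_port       = 22
    to_port         = 22
    protocol        = "tcp"
    security_groups = [aws_security_group.sgUSWTABastion.id]
  }
  ingress {
    from_port       = 80
    to_port         = 80
    protocol        = "tcp"
    security_groups = [aws_security_group.sgUSWTAALB.id]
  }
  egress {
    from_port   = 0
    to_port     = 0
    protocol    = "-1"
    cidr_blocks = ["0.0.0.0/0"]
  }
  tags = {
    Name = "Allow access on ports 22 and 80"
  }
}
```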

The comments in main.tf tell you what each block does. Terraform has great documentation, so if you search for any of the resource types used here you’ll find plenty of detail. Make changes to suit your needs and, once you have everything ready, initialize Terraform so it downloads the necessary plugins.

terraform init

Pay special attention to two blocks in main.tf. The aws_key_pair resource expects the public key file to be named exactly keyUSWTA.pub and to sit in the same directory. The aws_ami data source looks for the most recent image you own whose name starts with centos7-USWTA; that’s the AMI we created with Packer. Then create the infra. You have to type yes to proceed.

terraform apply

Once completed (in my case it took 4 minutes and 30 seconds), you’ll see the values defined in outputs.tf.

Apply complete! Resources: 25 added, 0 changed, 0 destroyed.

Outputs:

aws_app1_ip = 192.168.201.91
aws_app2_ip = 192.168.201.187
aws_ip = 54.145.191.102
aws_lb = albUSWTA-1695850209.us-east-1.elb.amazonaws.com

Go to http://albUSWTA-1695850209.us-east-1.elb.amazonaws.com, which is the aws_lb value from the output above, and voilà, your website is up and running on two servers in separate availability zones.

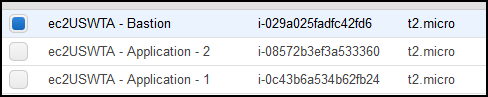

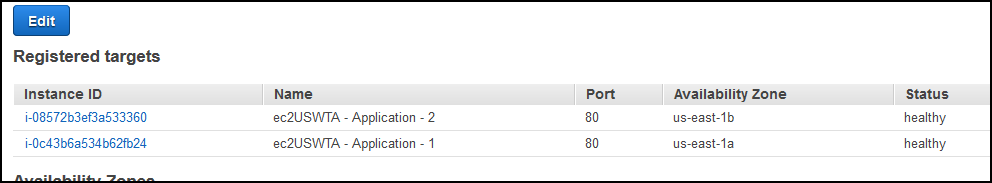

Go to your AWS console and you’ll see all of your resources there, e.g. the instances and the targets behind the load balancer.

Once you are done playing, destroy the resources.

terraform destroy